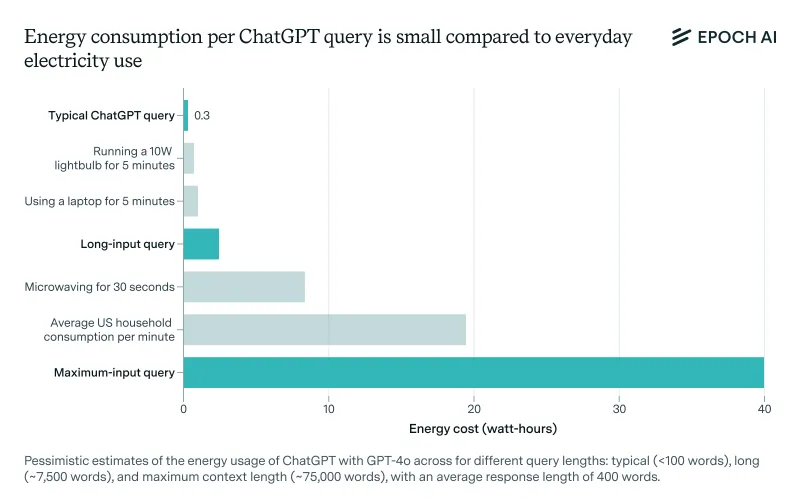

ChatGPT, OpenAI’s chatbot platform, has long been reported to be excessively power-hungry. According to a frequently cited statistic, ChatGPT requires approximately 3 watt-hours of energy to answer a single query, 10 times as much as a Google search.

However, actual energy consumption depends largely on how ChatGPT is used and on which AI model answers the query. Epoch AI, a nonprofit AI research organization, attempted to calculate the typical energy consumption of a ChatGPT query and concluded that previous estimates were significantly exaggerated.

Using GPT-4o, OpenAI’s latest default model for ChatGPT, as a reference, Epoch found that an average ChatGPT query consumes about 0.3 watt-hours, less than many household appliances use.
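To put the two per-query figures side by side, here is a minimal Python sketch. The 3 Wh and 0.3 Wh values come from the estimates quoted above; the query volume of one million per day is purely a hypothetical number chosen for illustration, not a figure from Epoch AI or OpenAI, and the function names are our own.

```python
# Per-query energy estimates quoted in the article, in watt-hours (Wh).
OLD_ESTIMATE_WH = 3.0    # the frequently cited figure
EPOCH_ESTIMATE_WH = 0.3  # Epoch AI's estimate, using GPT-4o as a reference


def daily_energy_kwh(wh_per_query: float, queries_per_day: int) -> float:
    """Total daily energy in kilowatt-hours for a given query volume."""
    return wh_per_query * queries_per_day / 1000.0


def estimate_ratio(old_wh: float, new_wh: float) -> float:
    """How many times larger the old estimate is than the new one."""
    return old_wh / new_wh


if __name__ == "__main__":
    # ASSUMPTION: hypothetical query volume, for illustration only.
    queries = 1_000_000

    old_kwh = daily_energy_kwh(OLD_ESTIMATE_WH, queries)
    new_kwh = daily_energy_kwh(EPOCH_ESTIMATE_WH, queries)
    factor = estimate_ratio(OLD_ESTIMATE_WH, EPOCH_ESTIMATE_WH)

    print(f"Old estimate:   {old_kwh:.1f} kWh per day")
    print(f"Epoch estimate: {new_kwh:.1f} kWh per day")
    print(f"The old figure is about {factor:.0f} times the Epoch figure")
```

Whatever query volume is plugged in, the gap between the two totals stays at the same factor of roughly ten, because both scale linearly with the number of queries.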

The energy usage of AI has become a highly controversial topic as AI companies rapidly expand their infrastructure. According to TechCrunch, a group of over 100 organizations published an open letter last week urging the AI industry and regulators to ensure that new AI data centers do not deplete natural resources or force utility companies to rely on non-renewable energy sources.

Although Epoch AI’s report indicates that current AI energy consumption is not as high as previously claimed, AI is expected to advance further, potentially requiring more energy for training in the future.

Additionally, despite recent breakthroughs in AI efficiency, the scale of AI deployment is predicted to drive a massive, power-intensive infrastructure expansion. Within the next two years, AI data centers may require nearly all of California’s 2022 power capacity (68 GW), according to a Rand report.

By 2030, training frontier models could demand power output equivalent to eight nuclear reactors (8 GW), the report predicts.

Moreover, ChatGPT itself reaches a vast and growing number of users, leading to increasing server demands. Consequently, OpenAI, along with several investment partners, plans to spend billions of dollars on new AI data center projects over the next few years.

Isn’t it fascinating? Running a highly advanced and fast AI system requires significant energy, and one can only imagine the electricity bills OpenAI must face.

Via: TechCrunch