Last month, it was reported that Microsoft would bring the DeepSeek R1 model to its cloud platform, and even bring an NPU-optimized version of DeepSeek-R1 directly to Copilot+ PCs powered by Qualcomm Snapdragon X processors.

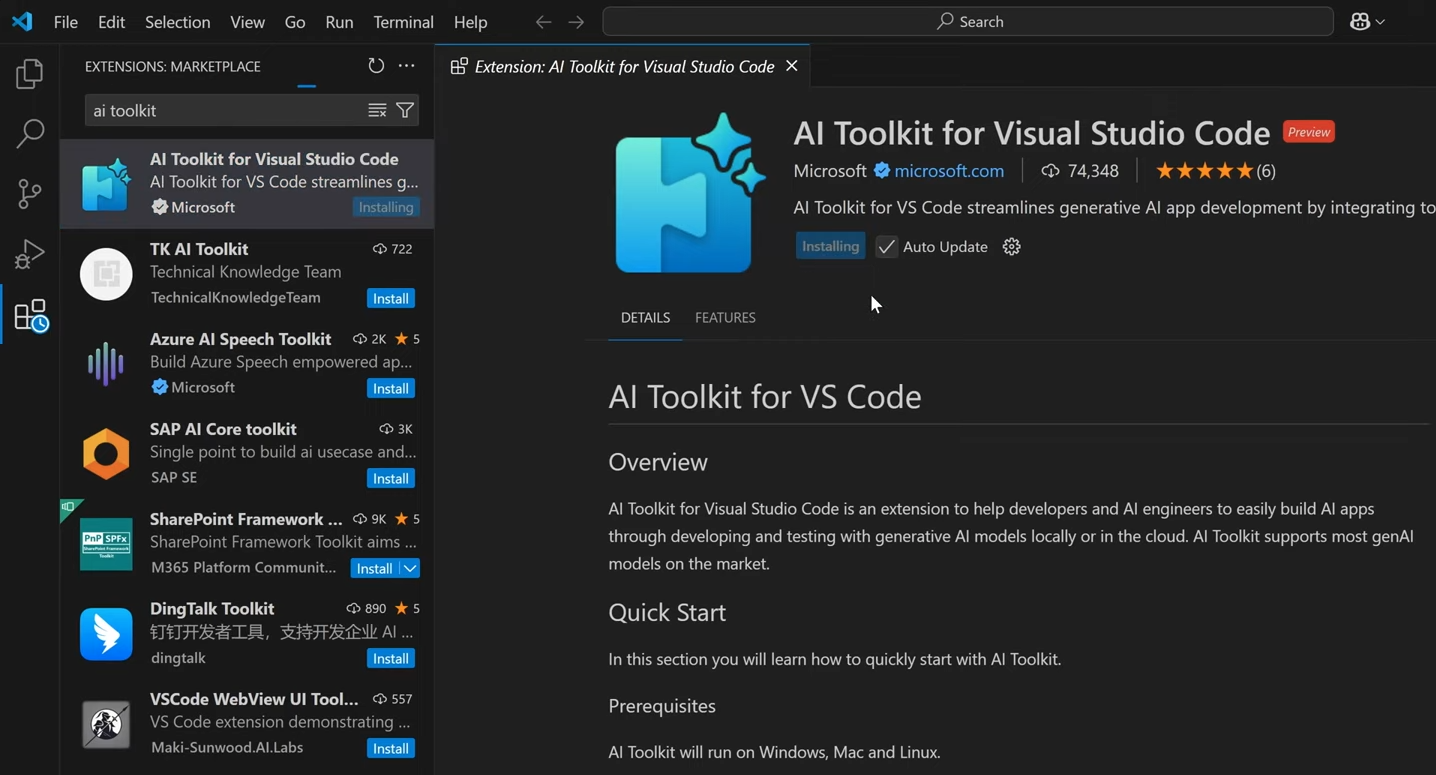

In short, back in February 2025, the first of these models, DeepSeek-R1-Distill-Qwen-1.5B, became available in the AI Toolkit for VS Code, bringing significant improvements to the tool.

Interestingly, yesterday, Microsoft announced the availability of the distilled DeepSeek R1 7B and 14B models for Copilot+ PCs via Azure AI Foundry. With the ability to run 7B and 14B models locally on Copilot+ PCs, developers can now build AI-powered applications that were previously not feasible on-device.

Additionally, since these models will run on NPUs, users can expect sustained AI computing power with minimal impact on battery life and thermal performance, freeing up the CPU and GPU for other tasks unrelated to AI.

Interested developers can download and run the DeepSeek 1.5B, 7B, and 14B model variants on Copilot+ PCs through the AI Toolkit VS Code extension. The DeepSeek models are optimized in ONNX QDQ format and downloaded directly from Azure AI Foundry. Besides being available on devices with Snapdragon X processors, these models will also be supported on Copilot+ PCs powered by Intel Core Ultra 200V and AMD Ryzen processors in the future.
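For readers wondering what "QDQ" refers to, it stands for quantize/dequantize: values are stored as low-precision integers together with a scale and zero point, then mapped back to approximate floats when used. The snippet below is only a minimal sketch of that arithmetic in plain Python. The function names, the int8 range, and the sample numbers are assumptions made for illustration and are not taken from Microsoft's models or tooling.

```python
def quantize(x, scale, zero_point=0, qmin=-128, qmax=127):
    """Map a float to an integer code, clamped to the int8 range (assumed here)."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point=0):
    """Map an integer code back to an approximate float."""
    return (q - zero_point) * scale


def qdq(values, scale, zero_point=0):
    """Quantize then dequantize each value, as a Q/DQ pair would."""
    return [dequantize(quantize(v, scale, zero_point), scale, zero_point)
            for v in values]


# Illustrative values only; a real model chooses scales per tensor or channel.
weights = [0.02, -0.5, 0.333, 3.0]
scale = 0.01
approx = qdq(weights, scale)

for original, restored in zip(weights, approx):
    print(f"{original:>7.3f} -> {restored:>7.3f}")
```

Values inside the representable range come back with an error of at most half the scale, while anything outside it (such as 3.0 here) is clamped to the largest code. That trade of precision for smaller, integer-friendly storage is what makes this kind of format a good fit for NPUs.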

This is undoubtedly a significant development, especially for Copilot+ users and developers actively working with AI. With the NPU in Copilot+ devices handling inference, AI workloads should run more efficiently than on PCs relying solely on CPUs and GPUs.

What are your thoughts on this? Share your opinions in the comments below.

Via: Microsoft